User Tip: Don't know what to say? Ask the bot about Ethan Turon, or ask it how to organize a digital product's roadmaps. If you see a blank message returned, just resend your message.

7/27 Update: I spent about 10 hours solid working on my AI bot today, to give it memory, to give it the ability to retrieve data from a data lake I built, and to make various infrastructure quality improvements. I have successfully taught myself and executed prototype RAG functions, which is awesome. Retrieval-Augmented Generation (RAG) is the whole basis of utilizing AI to parse, summarize, and use various data types for research and advanced analytical operations. What I'm really having trouble with is sending this "streaming data" back to the webpage so the AI responses are displayed to you, the user. Since the AI bot is thinking on its own, the responses are not hard-coded or predetermined in any way, but generated live. So, I have to create a path back to the website for that data stream. I'm on the 15th version of my AI's codebase, but since I'm still struggling, I'm going to get something to eat and step away to formulate my solutions. I'm also beginning to run into memory issues with the bot being served on this laptop because my codebase is taking up the whole machine. My code is the bot, and the machine with data is the brain.
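For anyone curious what that "path back to the website" can look like, here is a minimal sketch, not my actual codebase. It assumes Python and the Server-Sent Events (SSE) wire format, which is one common way to push live text to a browser. The function names, the `token` field, and the `done` event are all hypothetical, and the "model" is faked by splitting a sentence into words.

```python
import json
from typing import Iterable, Iterator


def fake_token_stream(text: str) -> Iterator[str]:
    """Stand-in for a model that emits its reply one token at a time."""
    for word in text.split():
        yield word + " "


def to_sse(tokens: Iterable[str]) -> Iterator[str]:
    """Wrap each token in a Server-Sent Events frame the browser can read."""
    for token in tokens:
        # JSON-encode so a newline inside a token cannot break the frame.
        yield f"data: {json.dumps({'token': token})}\n\n"
    # Hypothetical end-of-stream marker so the page knows the reply is complete.
    yield "event: done\ndata: {}\n\n"


if __name__ == "__main__":
    for frame in to_sse(fake_token_stream("Hello from the bot")):
        print(frame, end="")
```

A web framework would send these frames over a response with the `text/event-stream` content type, and the page would append each token as it arrives instead of waiting for the whole answer.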

7/28 Update: After a good 4 months and these last 20 hours of research and development, my first productionized, business-ready, RAG-enabled AI Web Bot is live. I even optimized my API for this low-power laptop to reduce the AI's response time from upwards of 5 minutes for a complicated response with NO RAG, to about a minute or less WITH RAG enabled. I'm still limited by careful, manual proxy management for this web-enabled chat, but as long as my server is online, and as long as I keep this laptop plugged in, the bot should be live. Now, I can keep making quality improvements and implementing new features such as batch and chronology processes. I can also keep training the AI on any customized content.

Latest Update: I added a cron job and heartbeat checker to keep my server live on its own. It also pings itself so it doesn't time out. These aren't perfect systems, of course, but they're customized, and I'm using 20 years of full-stack corporate IT experience to develop enterprise-ready architecture and digital products of the future, on my own. I have also created version history and documented my Win11 workflow so that I can teach others how to build custom Agentic AI LLMs. I guess I can start porting the workflow to Linux next.
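Here is a rough sketch of the heartbeat idea, assuming Python and only the standard library. It is an illustration rather than my production checker: `http_ping`, `heartbeat`, and the `retries` parameter are hypothetical names, and what "restart" actually does is left to whatever callback you pass in.

```python
import urllib.error
import urllib.request
from typing import Callable


def http_ping(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers with a 2xx status within the timeout."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, or an HTTP error status.
        return False


def heartbeat(
    ping: Callable[[], bool],
    restart: Callable[[], None],
    retries: int = 3,
) -> bool:
    """Ping up to `retries` times; call `restart` only if every attempt fails."""
    for _ in range(retries):
        if ping():
            return True
    restart()
    return False
```

A scheduled job could then run something like `heartbeat(lambda: http_ping("http://localhost:8000/health"), restart_server)` every minute, where the URL and `restart_server` are placeholders for your own setup. Retrying a few times before restarting avoids bouncing the server over a single slow response.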

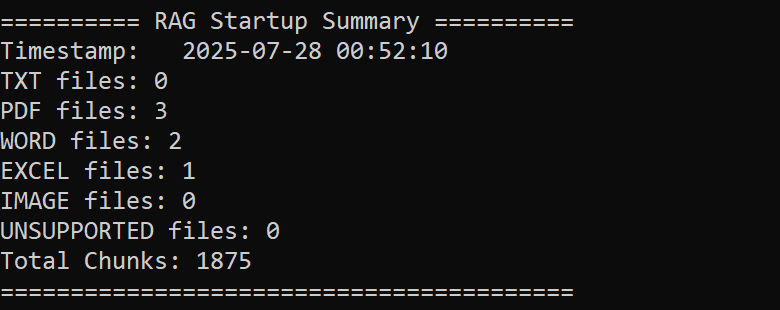

The image above shows my RAG server starting successfully for the first time. I designed the output interface to report how many chunks are created. Chunks are smaller pieces of the data pool that the LLM retrieves, learns from, and parses when responding to queries. For simplicity, think of chunks like "memories". The more memories there are, the more experience the AI can leverage. The AI can also be an expert on particular domains by training upon specifically curated data pools concentrating on any one subject. It's like sending your AI to college.
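To make "chunks" concrete, here is a small sketch of one common approach: fixed-size word windows with a little overlap, so a sentence cut at a boundary still appears whole in one chunk. It assumes Python. The function name, the default sizes, and the sample text are hypothetical and not necessarily what my server uses.

```python
from typing import List


def chunk_words(text: str, size: int = 200, overlap: int = 20) -> List[str]:
    """Split text into chunks of `size` words, each sharing `overlap` words
    with the previous chunk."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


if __name__ == "__main__":
    sample = "word " * 1000  # placeholder document
    print(f"Created {len(chunk_words(sample))} chunks")
```

In a RAG pipeline, each chunk would then be embedded and stored in the vector store, and the chunks closest to the user's query are handed to the LLM as context.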

This image above is of the AI training itself. What we see as incoherent gibberish is then translated into text on the screen as a response to your chat with this custom AI. The AI is generating its own thoughts and responses based on the query and a data pool.

8/25 Update: The vector store refresh button works, chat responds faster with server-side optimizations, and I added debugging, logging, and error handling. Now I have to implement quality-of-life (QoL) improvements.

I have also invented Monologue Mode, whereby the AI Bot talks about whatever it wants on its own, but can be tuned to one particular topic or many topics. Maybe I can incorporate a gibberish meter. I'm developing Monologue Mode as you read this.